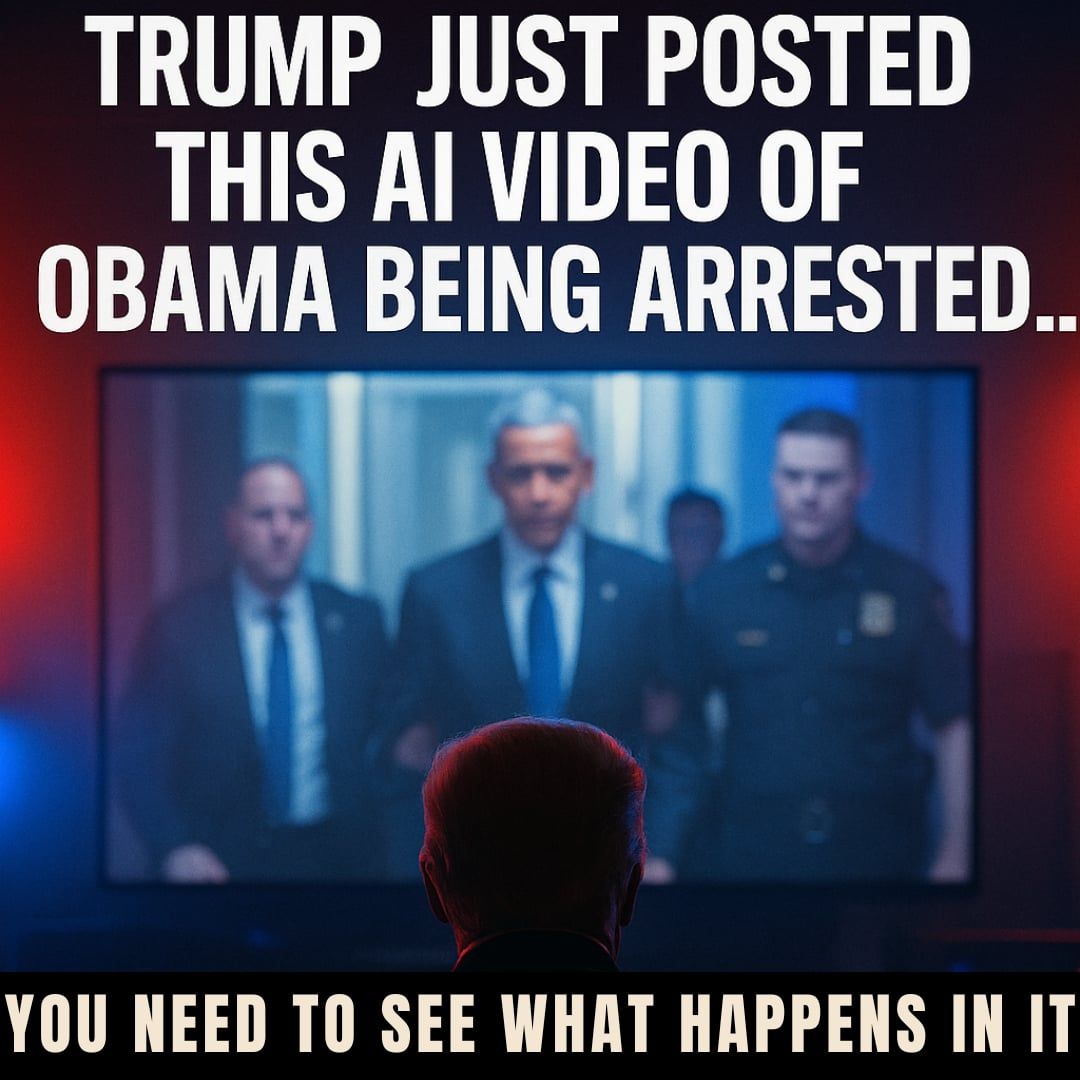

Donald Trump set social media ablaze this week when he shared an unnervingly lifelike AI-generated video showing former President Barack Obama being handcuffed and led away by uniformed officers. In the clip, Obama’s familiar gait and facial expressions are rendered so precisely that at first glance it’s hard to tell it isn’t genuine footage. Yet despite the realism, there is no record of any official arrest—this scenario exists only in the realm of artificial intelligence.

The seven‑second video opens with a tight shot of Obama stepping out of a black SUV, followed by agents pulling his arms behind his back. The soundtrack pulses with dramatic orchestration, heightening the sense that viewers are witnessing a breaking-news moment. Trump offered no explanation or caption beyond hitting “share,” leaving observers to wonder whether he intended it as a political warning, a satirical jab, or simply a provocative stunt designed to dominate the news cycle.

Reactions poured in almost immediately. Critics denounced the clip as reckless misinformation, arguing that even a savvy audience might mistake it for authentic news if seen without context. “This is exactly the kind of content that destroys trust in our institutions,” said Joanne Sinclair, a media ethics professor at Stanford University. “When former presidents appear to be arrested in hyper‑realistic deepfakes, it undermines our ability to distinguish fact from fiction.” Some Trump supporters, however, praised the clip as “predictive programming,” suggesting it hinted at legal troubles they believe Obama should face. A smaller faction applauded the video as “high‑level trolling,” evidence of Trump’s uncanny knack for steering public conversation.

The timing of Trump’s post—just months before the 2026 midterm elections—only deepens the controversy. Experts warn that AI tools capable of fabricating realistic video and audio are now widely accessible, putting manipulated political content within reach of anyone with moderate technical skill. “We’re entering a new era of political warfare,” said Dr. Marcus Lee, a cybersecurity analyst at Georgetown. “Deepfakes like this can be weaponized not only to deceive voters, but to sow chaos and weaken faith in democratic processes.”

Social media platforms have scrambled to respond. Twitter temporarily removed the shared post under its policy against synthetic media that could mislead users, but reinstated it after Trump’s team contested the takedown. Facebook issued a statement acknowledging that the clip violated its guidelines on manipulated political content, yet cited freedom‑of‑expression concerns in deciding not to remove it outright. Meanwhile, TikTok and YouTube warned they would label and limit the spread of any similar videos in the future.

Legal scholars are also sounding alarms. Although there are few clear laws governing political deepfakes, some members of Congress are drafting bipartisan legislation to require that any AI‑generated representation of a public figure be clearly marked. “If we don’t act, these tools could erode our public discourse beyond repair,” cautioned Representative Julia Martinez, who plans to introduce a bill this fall mandating explicit “deepfake” watermarks.

As Trump’s video continues to circulate, its ultimate impact remains uncertain. Will it shape public opinion against Obama, rally Trump’s base, or simply serve as yet another flashpoint in America’s culture wars? One thing is clear: in an age where algorithms can fabricate history with a few keystrokes, Americans are facing a choice between vigilance and naiveté. Whether you view Trump’s clip as dangerous disinformation, clever political theater, or a bit of both, it underscores a rapidly evolving challenge for voters, platforms, and policymakers alike.